This is an experiment in adding OpenFlow functionality to an Arista switch by running Open vSwitch in userspace mode. I believe Arista will release a complete OpenFlow implementation soon, but as of July 2011 it is not yet available, so I simply wanted to build an OpenFlow-capable environment for my lab work.

Note: This implementation does not use the hardware switching feature, so its performance is poor. It is only an experimental stopgap until a full-featured OpenFlow implementation is released. Still, it yields a 48-port OpenFlow switch, and the limited performance does not matter for my purposes.

Yutaka Yasuda (Arista Square)

29/July/2011 : first release. tested on the model 7048T with EOS 4.6.2 and Open vSwitch 1.1.1.

02/Aug/2011 : a note for kernel module added.

1. Abstract

1.1 Layout and Running Environment

2. Configuration

2.1 Open vSwitch Installation

2.2 Ports Configuration

3. Starting Up

3.1 Controller

3.2 Start the OpenFlow daemon

3.3 Status check

4. Performance Check

4.1 Evaluation by iperf

4.2 Notice for this Evaluation

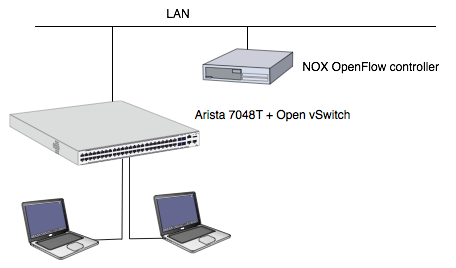

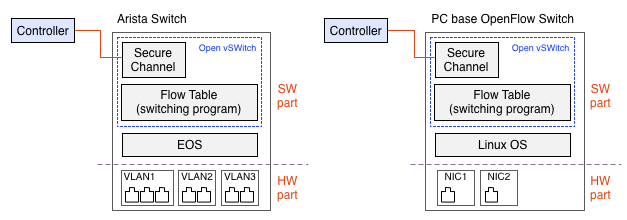

Figure 1 shows the layout of this experiment. Open vSwitch runs in userspace mode on the Arista box and turns it into an OpenFlow switch. NOX runs on a PC as the external OpenFlow controller.

Fig 1. The structure of this experiment

This experiment has some limitations. First, this layout is not suited to high performance. See 4.2 Notice for this Evaluation for more detail.

Second, the OpenFlow daemon of the Open vSwitch suite (ovs-openflowd) uses TUN/TAP to watch switch ports. Luckily, Arista ships the tun kernel module. It cannot be applied to switch ports directly, but it does work with VLAN interfaces. Therefore, this layout treats each VLAN, not each port, as the unit of control. 2.2 Ports Configuration also explains this issue.

For reference, here is the version information for EOS and Linux on the tested box.

    localhost>show version
    Arista DCS-7048T-4S-F
    Hardware version:    02.02
    Serial number:       JSH09494949
    System MAC address:  001c.730c.0c0c
    Software image version: 4.6.2
    Architecture:           i386
    Internal build version: 4.6.2-365580.EOS462
    Internal build ID:      92d7cfd3-379e-4e05-b1f3-73b79cde051d
    ....(snipped)
    localhost>enable
    localhost#bash
    Arista Networks EOS shell
    [admin@localhost ~]$ uname -a
    Linux localhost 2.6.32.23.Ar-359566.EOS462-i386 #1 SMP PREEMPT Fri Feb 18 12:51:54 EST 2011 i686 athlon i386 GNU/Linux
    [admin@localhost ~]$

To build Open vSwitch binaries for Arista, prepare a 32-bit Linux system on a PC. Then get openvswitch-1.1.1.tar.gz from http://openvswitch.org/ and build it. The build is straightforward in most cases. Here are the steps to follow:

    # tar xzf openvswitch-1.1.1.tar.gz
    # cd openvswitch-1.1.1
    # ./boot.sh
    # ./configure
    # make
    # make install

Only the ovs-openflowd and ovs-ofctl files under the utilities directory are required to run the OpenFlow function. Just copy them to any directory you like on the Arista box.

ovs-openflowd also needs a run-time directory. Create it as follows.

    # /bin/mkdir -p /usr/local/var/run/openvswitch
    #

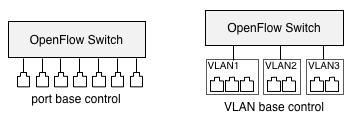

A typical OpenFlow switch, including Open vSwitch, controls each port separately, but this configuration can control only each VLAN, not each port. (See also the limitations described in 1.1 Layout and Running Environment.)

Fig 2. Port base control (left) and VLAN base control (right)

In practice, however, there is no difference. Just create one VLAN for every single port.

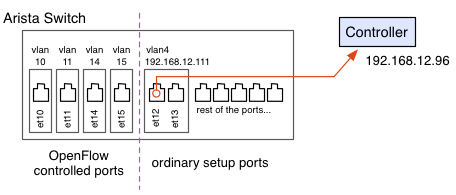

In this example, we assign four switch ports to OpenFlow control: et10, et11, et14 and et15. The remaining ports stay under EOS management. In our environment we also need one more VLAN to connect to the controller on the private network, and et12 and et13 belong to it.

Fig 3. Port configuration for this example

The following steps create a VLAN that includes a single switch port.

    localhost#configure
    localhost(config)#vlan 10
    localhost(config-vlan-10)#interface ethernet 10
    localhost(config-if-Et10)#switchport mode access
    localhost(config-if-Et10)#switchport access vlan 10
    localhost(config-if-Et10)#spanning-tree mode none
    localhost(config-if-Et10)#no shutdown
    localhost(config)#vlan 10
    localhost(config-vlan-10)#name vlan10
    localhost(config-vlan-10)#interface vlan 10
    localhost(config-if-Vl10)#spanning-tree mode none
    localhost(config-if-Vl10)#no shutdown       << don't forget this
    localhost(config-if-Vl10)#
    localhost(config-if-10)#show vlan configured-ports
    VLAN  Name                             Status    Ports
    ----- -------------------------------- --------- -------------------------------
    1     default                          active    Et1, Et2, Et3, Et4, Et5, Et6
    .....
    10    vlan10                           active    Et10
    localhost(config-vlan-10)#

This shows that VLAN 10 includes only one switch port. Repeat the procedure three more times for et11, et14 and et15.
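Since the same commands have to be typed once per port, it can help to generate them. The following Python sketch only prints the command lines from the transcript above for a list of port numbers. The function names are made up for this illustration, and it assumes the VLAN ID is chosen equal to the port number, as in this example. It does not talk to the switch, so the output still has to be entered in the EOS CLI by hand.

```python
from __future__ import annotations


def single_port_vlan_commands(port: int) -> list[str]:
    """Return the CLI lines that put Ethernet<port> alone into VLAN <port>.

    The sequence mirrors the transcript in this article, command for command.
    """
    return [
        f"vlan {port}",
        f"interface ethernet {port}",
        "switchport mode access",
        f"switchport access vlan {port}",
        "spanning-tree mode none",   # as typed in the transcript
        "no shutdown",
        f"vlan {port}",
        f"name vlan{port}",
        f"interface vlan {port}",
        "spanning-tree mode none",   # as typed in the transcript
        "no shutdown",               # without this the VLAN is not visible in ifconfig
    ]


def build_config(ports: list[int]) -> str:
    """Join the per-port command lists into one block, starting with 'configure'."""
    lines = ["configure"]
    for port in ports:
        lines.extend(single_port_vlan_commands(port))
    return "\n".join(lines)


print(build_config([10, 11, 14, 15]))
```

Keeping the per-port list in one function makes it easy to compare against the transcript if the command sequence ever needs to change.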

The "no shutdown" for the VLAN interface, marked "<< don't forget this" in the transcript above, is important. Without it, the VLAN does not appear in the output of the ifconfig command in the EOS bash shell, which means the Open vSwitch software cannot control it.

Next, set up the connection to the controller. We create a VLAN that includes et12 and et13, then assign it an IP address in the same segment as the controller. (There is no particular reason for assigning two ports, so do not worry about it.)

    ( see above example to bind ports to VLAN )
    localhost(config)#interface vlan 12
    localhost(config-if-Vl12)#ip address 192.168.12.111/24
    localhost(config-if-Vl12)#show interfaces vlan12
    Vlan12 is down, line protocol is lowerlayerdown (notconnect)
      Hardware is Vlan, address is 001c.730c.1e6b (bia 001c.730c.1e6b)
      Internet address is 192.168.12.111/24
      Broadcast address is 255.255.255.255
      Address determined by manual configuration
      MTU 1500 bytes
    localhost(config-if-Vl12)#show ip route     << in this timing, there is no route information
    Codes: C - connected, S - static, K - kernel, O - OSPF, B - BGP
    Gateway of last resort:
     S    0.0.0.0/0 [1/0] via 211.9.56.33
     C    211.9.56.32/29 is directly connected, Management1
    localhost(config-if-Vl12)#

The log above shows "Vlan12 is down" and no route information for it, but once you connect a live link to port et12, it changes as follows:

    localhost(config-if-Vl12)#show interfaces vlan12
    Vlan12 is up, line protocol is up (connected)
      Hardware is Vlan, address is 001c.730c.1e6b (bia 001c.730c.1e6b)
      Internet address is 192.168.12.111/24
      Broadcast address is 255.255.255.255
      Address determined by manual configuration
      MTU 1500 bytes
    localhost(config-if-Vl12)#show ip route
    Codes: C - connected, S - static, K - kernel, O - OSPF, B - BGP
    Gateway of last resort:
     S    0.0.0.0/0 [1/0] via 211.9.56.33
     C    192.168.12.0/24 is directly connected, Vlan12     << route information appeared
     C    211.9.56.32/29 is directly connected, Management1
    localhost(config-if-Vl12)#

Now is the time to verify reachability to the controller, for example with ping.

    localhost(config-if-Vl12)#ping 192.168.12.96
    PING 192.168.12.96 (192.168.12.96) 72(100) bytes of data.
    80 bytes from 192.168.12.96: icmp_seq=1 ttl=64 time=1.58 ms
    80 bytes from 192.168.12.96: icmp_seq=2 ttl=64 time=0.115 ms
    80 bytes from 192.168.12.96: icmp_seq=3 ttl=64 time=0.130 ms
    ^C

In this example, we start the controller as a simple L2 MAC-learning switch.

Specify pyswitch or switch as the NOX component, as follows:

    # pwd
    /home/hoge/nox/build/src
    # ./nox_core -v -i ptcp:6633 pyswitch
    [root@pavilion src]# ./nox_core -v -i ptcp:6633
    00001|nox|INFO:Starting nox_core (/home/ylb/nox/build/src/.libs/lt-nox_core)
    00002|kernel|DBG:Assigned a new UUID for the container: 8351905445277066603
    00003|pyrt|DBG:Loading a component description file 'nox/coreapps/examples/t/meta.json'.
    ...

Start the installed ovs-openflowd command on the Arista box. To run it in userspace mode, specify the bridge name (br0) with the 'netdev' prefix and the ports (vlan10 and so on) as follows. (See the manual for more detail about the options. The INSTALL.userspace file is helpful too.)

    # ovs-openflowd netdev@br0 --ports=vlan10,vlan11,vlan14,vlan15 tcp:192.168.12.96
    Jul 29 03:19:54|00001|openflowd|INFO|Open vSwitch version 1.1.1
    Jul 29 03:19:54|00002|openflowd|INFO|OpenFlow protocol version 0x01
    Jul 29 03:19:54|00003|ofproto|INFO|using datapath ID 0000002320d34d60
    Jul 29 03:19:54|00004|rconn|INFO|br0<->tcp:192.168.12.96: connecting...
    Jul 29 03:19:54|00005|rconn|INFO|br0<->tcp:192.168.12.96: connected
    Jul 29 03:19:54|00006|ofp_util|WARN|received Nicira extension message of unknown type 8
    Jul 29 03:19:54|00007|ofp_util|INFO|normalization changed ofp_match, details:
    Jul 29 03:19:54|00008|ofp_util|INFO| pre: wildcards=0xffffffff in_port= 0 dl_src=00:00:00:00:00:00 dl_dst=00:00:00:00:00:00 dl_vlan= 0 dl_vlan_pcp= 0 dl_type= 0 nw_tos= 0 nw_proto= 0 nw_src= 0 nw_dst= 0 tp_src= 0 tp_dst= 0
    Jul 29 03:19:54|00009|ofp_util|INFO|post: wildcards= 0x23fffff in_port= 0 dl_src=00:00:00:00:00:00 dl_dst=00:00:00:00:00:00 dl_vlan= 0 dl_vlan_pcp= 0 dl_type= 0 nw_tos= 0 nw_proto= 0 nw_src= 0 nw_dst= 0 tp_src= 0 tp_dst= 0
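When the set of VLANs changes often, the command line above can be assembled from a list rather than typed by hand. This is a small Python sketch under that assumption. The helper name openflowd_argv is hypothetical, and only the arguments already shown in this article (netdev@br0, --ports=, tcp:) are used.

```python
from __future__ import annotations

import shlex


def openflowd_argv(vlans: list[int], controller_ip: str, bridge: str = "br0") -> list[str]:
    """Build the ovs-openflowd argument list for a userspace (netdev) bridge."""
    ports = ",".join(f"vlan{vlan}" for vlan in vlans)
    return [
        "ovs-openflowd",
        f"netdev@{bridge}",       # 'netdev' selects the userspace datapath
        f"--ports={ports}",       # VLAN interfaces to place under OpenFlow control
        f"tcp:{controller_ip}",   # address of the OpenFlow controller
    ]


argv = openflowd_argv([10, 11, 14, 15], "192.168.12.96")
print(shlex.join(argv))
```

The list form can be passed directly to subprocess.run on the switch if Python is available there, which is itself an assumption about the environment.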

Now all machines connected to ports et10, et11, et14 and et15 can reach each other. Confirm this with ping or a similar tool.

If everything is working, the ovs-ofctl command can show the flow table and the port status.

The ovs-ofctl show command lists the ports configured by the OpenFlow daemon.

    # ovs-ofctl show br0
    OFPT_FEATURES_REPLY (xid=0x1): ver:0x1, dpid:0000000000001000
    n_tables:2, n_buffers:256
    features: capabilities:0x87, actions:0xfff
     1(vlan10): addr:00:1c:73:0c:1e:6b, config: 0, state:0
     2(vlan11): addr:00:1c:73:0c:1e:6b, config: 0, state:0
     3(vlan15): addr:00:1c:73:0c:1e:6b, config: 0, state:0
     4(vlan14): addr:00:1c:73:0c:1e:6b, config: 0, state:0
     LOCAL(br0): addr:c2:d0:54:26:1a:f5, config: 0x1, state:0x1
         current:    10MB-FD COPPER
    OFPT_GET_CONFIG_REPLY (xid=0x3): frags=normal miss_send_len=0
    #

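Curiously, the port numbers (1-4) do not follow the order given on the command line: here vlan15 became port 3 and vlan14 became port 4. These numbers matter on the controller programming side, though. I do not understand why they are assigned this way. Your thoughts are most welcome.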
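Because the numbering cannot be predicted, a controller-side script can read the mapping from the ovs-ofctl show output instead of hard-coding it. Below is a minimal Python sketch. The regular expression and the function name port_map are illustrative assumptions, and the sample text assumes the output is laid out with one port per line.

```python
from __future__ import annotations

import re

# Matches lines such as " 3(vlan15): addr:00:1c:73:0c:1e:6b, ..." and
# skips the LOCAL(br0) entry, which has no numeric port number.
PORT_LINE = re.compile(r"^\s*(\d+)\((\w+)\):\s+addr:", re.MULTILINE)


def port_map(show_output: str) -> dict[int, str]:
    """Map OpenFlow port numbers to interface names."""
    return {int(number): name for number, name in PORT_LINE.findall(show_output)}


SAMPLE = """\
OFPT_FEATURES_REPLY (xid=0x1): ver:0x1, dpid:0000000000001000
n_tables:2, n_buffers:256
features: capabilities:0x87, actions:0xfff
 1(vlan10): addr:00:1c:73:0c:1e:6b, config: 0, state:0
 2(vlan11): addr:00:1c:73:0c:1e:6b, config: 0, state:0
 3(vlan15): addr:00:1c:73:0c:1e:6b, config: 0, state:0
 4(vlan14): addr:00:1c:73:0c:1e:6b, config: 0, state:0
 LOCAL(br0): addr:c2:d0:54:26:1a:f5, config: 0x1, state:0x1
"""

for number, name in sorted(port_map(SAMPLE).items()):
    print(number, name)
```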

Here is the flow table after two machines communicated with each other. One PC was connected to et10 and the other to et11, and then ping was run. The fields to look at are the protocol type (arp, icmp), the in_port numbers 1 (vlan10 = et10) and 2 (vlan11 = et11), and the actions.

    # ovs-ofctl dump-flows br0
    NXST_FLOW reply (xid=0x4):
     cookie=0x0, duration=31.176s, table_id=0, n_packets=1, n_bytes=60, idle_timeout=53,arp,in_port=2,vlan_tci=0x0000,dl_src=00:d0:59:09:e3:9a,dl_dst=00:03:47:89:8f:e5,nw_src=0.0.0.0,nw_dst=0.0.0.0,opcode=0 actions=output:1
     cookie=0x0, duration=76.176s, table_id=0, n_packets=78, n_bytes=7644, idle_timeout=53,icmp,in_port=2,vlan_tci=0x0000,dl_src=00:d0:59:09:e3:9a,dl_dst=00:03:47:89:8f:e5,nw_src=192.168.13.101,nw_dst=192.168.13.100,icmp_type=0,icmp_code=0 actions=output:1
     cookie=0x0, duration=76.178s, table_id=0, n_packets=78, n_bytes=7644, idle_timeout=53,icmp,in_port=1,vlan_tci=0x0000,dl_src=00:03:47:89:8f:e5,dl_dst=00:d0:59:09:e3:9a,nw_src=192.168.13.100,nw_dst=192.168.13.101,icmp_type=8,icmp_code=0 actions=output:2
     cookie=0x0, duration=31.175s, table_id=0, n_packets=1, n_bytes=60, idle_timeout=53,arp,in_port=1,vlan_tci=0x0000,dl_src=00:03:47:89:8f:e5,dl_dst=00:d0:59:09:e3:9a,nw_src=0.0.0.0,nw_dst=0.0.0.0,opcode=0 actions=output:2
    #
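The flow entries are long, so pulling out just the protocol, input port and action makes them easier to read. The following Python sketch does that for one entry copied from the dump above. The function name parse_flow and the "proto" key are made up for this illustration, and the parser assumes the comma-separated layout shown here, which may differ in other Open vSwitch versions.

```python
from __future__ import annotations


def parse_flow(line: str) -> dict[str, str]:
    """Split one flow entry into a dict of its fields."""
    match_part, _, actions = line.strip().partition(" actions=")
    flow = {"actions": actions}
    for token in match_part.split(","):
        key, sep, value = token.strip().partition("=")
        if sep:
            flow[key] = value      # key=value field such as in_port=2
        elif key:
            flow["proto"] = key    # bare keyword such as arp or icmp
    return flow


SAMPLE = (
    "cookie=0x0, duration=31.176s, table_id=0, n_packets=1, n_bytes=60, "
    "idle_timeout=53,arp,in_port=2,vlan_tci=0x0000,"
    "dl_src=00:d0:59:09:e3:9a,dl_dst=00:03:47:89:8f:e5,"
    "nw_src=0.0.0.0,nw_dst=0.0.0.0,opcode=0 actions=output:1"
)

flow = parse_flow(SAMPLE)
print(f"{flow['proto']}: in_port {flow['in_port']} -> {flow['actions']}")
```

For the sample entry this reports an ARP flow entering on port 2 (vlan11 = et11) and leaving on port 1 (vlan10 = et10).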

See 4.2 Notice for this Evaluation for the performance limitations of this setup.

We used the iperf benchmark tool with two Macintoshes, each with a 1 Gbps interface. It reports the average bandwidth of a 10-second TCP data transfer.

Of course, this is best-case (champion) data for the throughput measurement. I did not pursue the worst case, because even the best number is not very high. Real traffic contains many short flows, so the throughput should be lower.

First we measured the upper limit of the measurement environment itself. The two Macintoshes were connected to ordinary ports of the Arista (7048T) in the same L2 VLAN. The result is around 940 Mbps, which is enough headroom to test the performance of OpenFlow.

    % iperf -c 192.168.13.111
    ------------------------------------------------------------
    Client connecting to 192.168.13.111, TCP port 5001
    TCP window size:  129 KByte (default)
    ------------------------------------------------------------
    [  3] local 192.168.13.110 port 49242 connected with 192.168.13.111 port 5001
    [ ID] Interval       Transfer     Bandwidth
    [  3]  0.0-10.0 sec  1.09 GBytes   940 Mbits/sec
    %

Here is the result when the two Macs are connected to OpenFlow-controlled ports of the Arista. Throughput is around 150 Mbps, as follows.

    % iperf -c 192.168.13.111
    ------------------------------------------------------------
    Client connecting to 192.168.13.111, TCP port 5001
    TCP window size:  129 KByte (default)
    ------------------------------------------------------------
    [  3] local 192.168.13.110 port 49239 connected with 192.168.13.111 port 5001
    [ ID] Interval       Transfer     Bandwidth
    [  3]  0.0-10.2 sec   191 MBytes   157 Mbits/sec
    %
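To compare runs without reading the numbers off by eye, the bandwidth figure can be extracted from the iperf summary line. This Python sketch is one way to do it. The function name mbits_per_sec and the regular expression are assumptions for illustration, and it only handles the "bits/sec" summary format shown in this article.

```python
import re

# Matches "940 Mbits/sec" but not "1.09 GBytes" (transfer size).
BANDWIDTH = re.compile(r"([\d.]+)\s+([KMG])bits/sec")
TO_MBITS = {"K": 1e-3, "M": 1.0, "G": 1e3}


def mbits_per_sec(iperf_output: str) -> float:
    """Return the last bandwidth figure in the output, in Mbit/s."""
    matches = BANDWIDTH.findall(iperf_output)
    if not matches:
        raise ValueError("no bandwidth figure found")
    value, unit = matches[-1]
    return float(value) * TO_MBITS[unit]


# Summary lines copied from the two measurements in this article.
baseline = mbits_per_sec("[  3]  0.0-10.0 sec  1.09 GBytes   940 Mbits/sec")
openflow = mbits_per_sec("[  3]  0.0-10.2 sec   191 MBytes   157 Mbits/sec")
print(f"OpenFlow path reaches {openflow / baseline:.1%} of the baseline")
```

With these two lines, the OpenFlow-controlled path comes out at roughly one sixth of the plain L2 baseline.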

While iperf was running, the CPU usage of the ovs-openflowd process was around 80%. Here is the output of the "top" command.

    top - 06:55:17 up 3:52, 3 users, load average: 0.10, 0.07, 0.01
    Tasks: 134 total, 5 running, 129 sleeping, 0 stopped, 0 zombie
    Cpu(s): 38.9%us, 22.0%sy, 0.0%ni, 27.6%id, 0.0%wa, 0.0%hi, 11.4%si, 0.0%st
    Mem: 2054984k total, 1284636k used, 770348k free, 95632k buffers
    Swap: 0k total, 0k used, 0k free, 737564k cached
      PID USER     PR  NI  VIRT  RES  SHR S %CPU %MEM     TIME+ COMMAND
     8536 root     20   0  2596 1212  976 R 78.8  0.1   0:18.40 ovs-openflowd
     1422 root     20   0  165m  51m  18m R 29.6  2.6  63:55.00 PhyBcm54980
     1386 root     20   0  155m  41m  12m R 19.9  2.1  43:17.18 Mdio
     1413 root     20   0  181m  64m  26m S  7.6  3.2  23:01.05 SandCell
     1354 root     20   0  172m  78m  38m S  2.0  3.9   4:34.26 Sysdb
     1423 root     20   0  164m  50m  17m S  1.0  2.5   1:43.46 PhyAeluros
     1355 root     20   0  171m  69m  32m S  0.7  3.5   1:53.24 Fru
     1377 root     20   0  154m  40m  10m R  0.7  2.0   0:55.50 PhyEthtool
     1396 root     20   0  154m  39m   9m S  0.7  2.0   0:52.62 Thermostat
     8524 root     20   0 19132  10m 7900 R  0.7  0.5   0:00.56 top
     1352 root     20   0  153m  30m 2136 S  0.3  1.5   0:54.25 ProcMgr-worker
     1375 root     20   0  158m  45m  15m S  0.3  2.3   0:04.88 Lag+LacpAgent
     1376 root     20   0  155m  42m  12m S  0.3  2.1   0:40.02 Adt7462Agent
     1379 root     20   0  155m  41m  12m S  0.3  2.1   0:40.59 Smbus
        1 root     20   0  2048  840  616 S  0.0  0.0   0:00.43 init
        2 root     20   0     0    0    0 S  0.0  0.0   0:00.00 kthreadd
        3 root     RT   0     0    0    0 S  0.0  0.0   0:00.01 migration/0
        4 root     20   0     0    0    0 S  0.0  0.0   0:00.03 ksoftirqd/0
        5 root     RT   0     0    0    0 S  0.0  0.0   0:00.00 watchdog/0

For a fair comparison, here is the result for a generic PC. The system runs Fedora 12 (64-bit) on an Intel Core 2 Duo E7200 2.53 GHz CPU. It has only 2 GB of memory, but no swap space was in use.

Here is the result when the two Macs are connected to OpenFlow-controlled ports of this PC. Throughput is around 370 Mbps, as follows.

    % iperf -c 192.168.13.111
    ------------------------------------------------------------
    Client connecting to 192.168.13.111, TCP port 5001
    TCP window size:  129 KByte (default)
    ------------------------------------------------------------
    [  3] local 192.168.13.110 port 49259 connected with 192.168.13.111 port 5001
    [ ID] Interval       Transfer     Bandwidth
    [  3]  0.0-10.0 sec   442 MBytes   371 Mbits/sec
    %

The CPU usage of ovs-openflowd process was around 98%, wow. Here is the result of "top" command.

    top - 16:19:00 up 6 days, 23:47, 7 users, load average: 0.33, 0.09, 0.03
    Tasks: 188 total, 2 running, 186 sleeping, 0 stopped, 0 zombie
    Cpu(s): 13.6%us, 30.0%sy, 0.0%ni, 43.3%id, 0.7%wa, 0.0%hi, 12.4%si, 0.0%st
    Mem: 2047912k total, 1800656k used, 247256k free, 259136k buffers
    Swap: 4128760k total, 0k used, 4128760k free, 939936k cached
      PID USER     PR  NI  VIRT  RES  SHR S %CPU %MEM     TIME+ COMMAND
    16248 root     20   0 39572 1880 1428 R 97.6  0.1   0:32.33 ovs-openflowd
     1633 root     20   0  134m  20m 9504 S  2.3  1.0  24:08.65 Xorg
     2218 ylb      20   0  298m  17m 9.9m S  1.3  0.9  12:41.13 gnome-terminal
        4 root     20   0     0    0    0 S  1.0  0.0   0:00.57 ksoftirqd/0
        7 root     20   0     0    0    0 S  0.7  0.0   0:38.41 ksoftirqd/1
     1206 root     20   0     0    0    0 S  0.3  0.0   0:32.45 kondemand/0
    16086 root     20   0  883m  21m 6360 S  0.3  1.1   0:06.43 lt-nox_core
    16268 root     20   0 14928 1204  864 R  0.3  0.1   0:00.11 top
        1 root     20   0  4144  888  620 S  0.0  0.0   0:00.66 init
        2 root     20   0     0    0    0 S  0.0  0.0   0:00.00 kthreadd
        3 root     RT   0     0    0    0 S  0.0  0.0   0:00.01 migration/0
        5 root     RT   0     0    0    0 S  0.0  0.0   0:00.00 watchdog/0
        6 root     RT   0     0    0    0 S  0.0  0.0   0:00.01 migration/1
        8 root     RT   0     0    0    0 S  0.0  0.0   0:00.00 watchdog/1
        9 root     20   0     0    0    0 S  0.0  0.0   0:03.73 events/0
       10 root     20   0     0    0    0 S  0.0  0.0   0:05.25 events/1
       11 root     20   0     0    0    0 S  0.0  0.0   0:00.00 cpuset
       12 root     20   0     0    0    0 S  0.0  0.0   0:00.00 khelper
       13 root     20   0     0    0    0 S  0.0  0.0   0:00.00 netns
       14 root     20   0     0    0    0 S  0.0  0.0   0:00.00 async/mgr
       15 root     20   0     0    0    0 S  0.0  0.0   0:00.00 pm
       16 root     20   0     0    0    0 S  0.0  0.0   0:00.25 sync_supers

As you can see, this machine also runs the NOX controller, but the controller adds almost no load for iperf-type traffic, which is a single long-lived connection. (Its CPU usage is actually 0.3%.)

The throughput of Open vSwitch running in userspace is 157 Mbps on Arista and 370 Mbps on the PC. That is roughly a 1 : 2 ratio, but the Arista has an AMD Athlon 1.8 GHz CPU while the PC uses an Intel Core 2 Duo 2.5 GHz. In addition, Arista has to use the TUN/TAP interface to watch ports. So the 1 : 2 result seems reasonable to us.

That said, Open vSwitch has a kernel module implementation to maximize performance, and it is the default. I think it is possible to build a kernel module that fits Arista. If the kernel module version increases performance dramatically, it is worth trying.

I measured the throughput with the kernel module on the PC, and it reached 840 Mbps with a fairly low CPU load. Applying the same 370 : 840 ratio suggests that Arista might be able to reach somewhere around 350 to 400 Mbps. The 840 Mbps figure may itself be limited by saturation of the 1 Gbps link, so Arista may have more headroom than that. For my personal use in the lab, 500 Mbps would be good enough for experimenting with OpenFlow. I think it is time to seriously consider building the kernel module for Arista.
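The scaling estimate can be checked with a few lines of arithmetic. This Python sketch uses the three throughput figures quoted in this article and assumes, without any measurement to back it, that the kernel-module speedup seen on the PC would carry over unchanged to the Arista box.

```python
# Throughput figures quoted in this article, in Mbit/s.
ARISTA_USERSPACE = 157.0
PC_USERSPACE = 370.0
PC_KERNEL = 840.0

# Speedup of the kernel module over userspace, as measured on the PC.
speedup = PC_KERNEL / PC_USERSPACE

# Assumption: the same factor applies on Arista (not verified).
projected = ARISTA_USERSPACE * speedup

print(f"kernel/userspace speedup on the PC: {speedup:.2f}x")
print(f"projected Arista throughput: {projected:.1f} Mbit/s")
```

A plain proportional estimate lands at about 356 Mbit/s. If the PC figure of 840 Mbps was capped by the 1 Gbps link rather than by the CPU, the real speedup, and so the projection, would be higher.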

First of all, this experimental implementation of OpenFlow on Arista, using Open vSwitch in userspace, is inherently unsuited to high performance. The result depends entirely on how the components are configured, not on Arista's real performance.

If you are looking for a commercial-grade OpenFlow switch with normal performance, just wait for the release of the official and "right" implementation of OpenFlow from Arista.

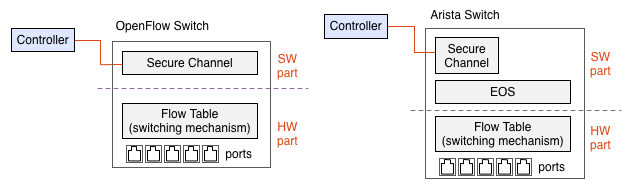

As the left side of Figure 4 shows, OpenFlow was originally designed for a mixture of hardware and software: the hardware part provides high-performance switching and the software part provides flexibility in selecting routes. Arista natively has a high-performance CPU and a full-featured software stack on the switch, so it is inherently a good platform for implementing OpenFlow. (Fig 4. right)

Fig 4. Standard layout (left) and Arista's typical implementation (right)

This experiment, however, does all of the OpenFlow work in software, including packet forwarding (see Fig. 5 left). That is far from the design philosophy of OpenFlow, so this experimental implementation is severely limited in performance as a result.

Fig 5. Experimental implement (left) and PC based implement (right)

It is, however, the same layout as a PC-based solution using Open vSwitch.

Even so, Open vSwitch on a PC uses a kernel module to gain performance, and that is now its default. Our implementation, by contrast, depends entirely on userspace operation and TUN/TAP, so its throughput limit should be lower than that of the PC-based solution.

From a performance standpoint there is no reason to try this approach, but there are other reasons to do so. One is the number of ports. As of July 2011, very few commercial OpenFlow switches are on the market, and those that are sold are very expensive. Many researchers therefore use PC-based OpenFlow switches, but these are severely limited in the maximum number of ports. So an Arista-based OpenFlow switch is very attractive even if its performance is strictly limited. My Arista 7048T provides 48 ports in a very small space in my lab.

It is also possible to create a number of OpenFlow switches in one box, and the Mininet project is a good example. Mininet helps you create many switches and hosts on one host. It is also good for testing your OpenFlow configuration with real traffic.